Today we are releasing RedBPF and ingraind, our eBPF toolkit that integrates with StatsD and S3, to gather feedback and see where others in the Rust community might take this framework. If you are looking to up your company’s monitoring game, gather more data about your Raspberry Pi cluster at home, or just have a strong academic interest in Rust and low-level bit shepherding, you might want to read on.

At Red Sift we run our own Kubernetes cluster and our own data processing pipeline on top of it. We’re dealing with a whole lot of email and wanted a more in-depth understanding of the communication patterns of our own systems. This type of data ultimately helps us develop a baseline and notice any irregularities that might indicate a security incident or malfunction.

RedBPF is a Rust library for eBPF, a flexible VM subsystem inside the Linux kernel. Using RedBPF, we built ingraind, a metric collector agent we can distribute as a single binary and a system-specific config file.

Existing eBPF solutions tend to focus primarily on performance and debugging, but we wanted to see if eBPF can help us understand our security profile better.

We started off with four primary things we wanted to know about: DNS activity and TLS connection details without having to resort to port filtering, UDP/TCP traffic volume per process, and file access patterns.

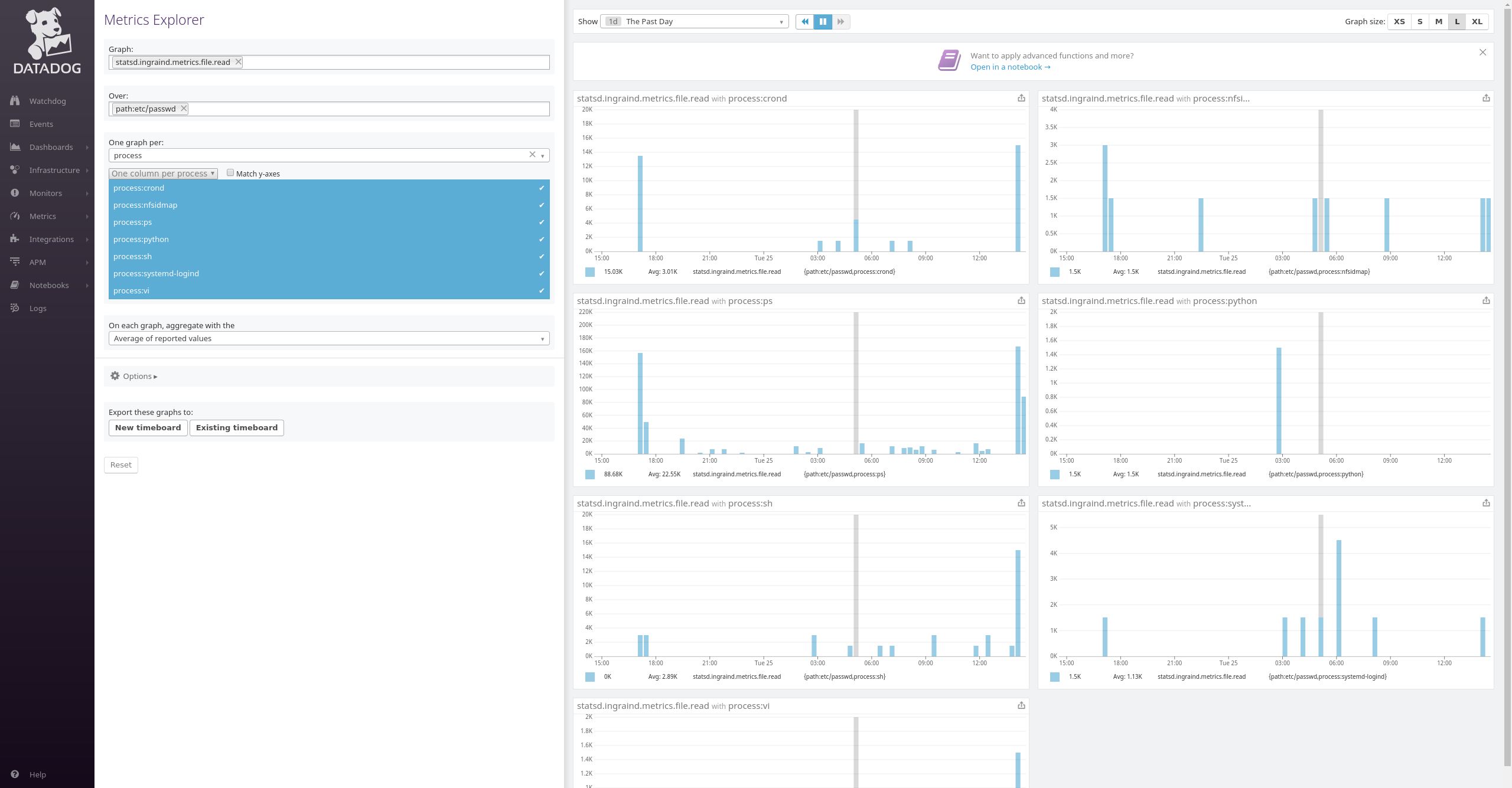

Since we’re already using Datadog for performance monitoring, it seemed logical to expand our dashboards with security-related metrics using a relatively lightweight agent that plugs easily into a StatsD backend.

eBPF

A relatively new subsystem in the Linux kernel, eBPF is making waves across the industry, and for good reasons. It’s at the

eBPF is a virtual machine within the kernel that allows hooking into network interfaces, syscalls, or even specific in-kernel functions behind the syscall gate. At the time of writing, there are 200-odd calls that we can monitor using tiny programs to get deep intelligence from the Linux kernel.

Due to its flexibility, people are using eBPF modules for various things: packet filtering, software-defined networking, performance monitoring, or generally extracting real-time diagnostics to better understand a system.

Space and attention span in a technical blog post are finite, so for a technical dive into eBPF, check out the wiki page we just opened up, or the amazing

Enter Rust

Most of the existing eBPF ecosystem is based on BCC, which has convenient Python and Go bindings. However, we wanted something that doesn’t need a full-blown compiler toolchain and kernel sources on the target box, so the traditional BCC workflow was out of the question, and its convenient bindings along with it.

Our self-imposed limitation left us with one option: GoBPF has support for bringing your own bytecode, and some great software already

Due to Go’s threading model, however, it can’t match Rust’s performance doing C FFI. Interacting with eBPF modules generally involves a lot of low level memory management, so this was important. We wanted to run ingraind in production, so it shouldn’t consume more resources than absolutely necessary. It seemed like building our own Rust BPF framework was a solid choice.

RedBPF

When dealing with eBPF modules in the kernel, there are a lot of intricacies to pay attention to, and one needs to dig through a lot of code to figure out how things are done. BCC is still the most comprehensive suite for working with eBPF. GoBPF and a few other libraries started diverging from it while maintaining some resemblance, and at the same time added features that can’t be found elsewhere.

I consider this an anti-pattern: because it’s not a straight-up fork but a semi-port, fixes and new features from the original code base become increasingly difficult to integrate. While rule #1 of Linux kernel development is “You don’t break userland”, in practice it just means that legacy mechanisms are kept around while new, preferred ways of achieving the same results are added.

To get the best of both worlds, we chose to go with a mixed approach: re-use whatever we can from BCC’s libbpf component, an amazing library that can be statically linked and exclusively deals with the runtime management of eBPF modules, and implement high-level bindings, ELF parsing, and other workflow-specific code in Rust.

The end result is RedBPF, a library focused on providing low-friction, idiomatic bindings for libbpf and for consuming perf events, along with a build-load-run workflow for eBPF that isn’t overly opinionated. Let’s walk through the details!

Building modules

If the build feature is enabled, RedBPF provides a toolkit for building C code into eBPF modules, generating Rust bindings from C header files through Bindgen, and using a build cache to speed things up when all of this is used through build.rs.

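[rust]
// build.rs
use std::env;
use std::path::PathBuf;
// RedBPF's build helpers (BuildCache, build, generate_bindings) and the
// source_files helper are imported here as well; omitted for brevity.

fn main() -> Result<(), Error> {
    let out_dir = PathBuf::from(env::var("OUT_DIR")?);

    // generate the build & bindgen flags here. skipped for brevity.

    let mut cache = BuildCache::new(&out_dir);

    // compile every changed C source in ./bpf into an eBPF ELF object
    for file in source_files("./bpf", "c")? {
        if cache.file_changed(&file) {
            build(&flags[..], &out_dir, &file).expect("Failed building BPF plugin!");
        }
    }

    // regenerate Rust bindings for every changed header
    for file in source_files("./bpf", "h")? {
        if cache.file_changed(&file) {
            generate_bindings(&bindgen_flags[..], &out_dir, &file)
                .expect("Failed generating data bindings!");
        }
    }

    cache.save();
    Ok(())
}
[/rust]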
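The build cache keeps repeated cargo builds fast because only sources whose contents changed get recompiled. RedBPF’s BuildCache is not shown here, so what follows is only a minimal sketch of the idea. It assumes a cache keyed on a hash of each file’s contents. HashCache and its in-memory map are hypothetical. A real cache would persist its state, which the save() call above suggests. DefaultHasher is not guaranteed to be stable across Rust releases, so it only suits the in-memory case shown here.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::fs;
use std::hash::{Hash, Hasher};
use std::io;
use std::path::{Path, PathBuf};

/// Hypothetical stand-in for a build cache: remembers a hash of each
/// source file's contents and reports whether it changed since last seen.
#[derive(Default)]
pub struct HashCache {
    seen: HashMap<PathBuf, u64>,
}

impl HashCache {
    /// Returns true if the file is new or its contents differ from the
    /// previous call, and records the new hash either way.
    pub fn file_changed(&mut self, path: &Path) -> io::Result<bool> {
        let bytes = fs::read(path)?;
        let mut hasher = DefaultHasher::new();
        bytes.hash(&mut hasher);
        let digest = hasher.finish();
        // insert returns the previous hash, if there was one
        let previous = self.seen.insert(path.to_path_buf(), digest);
        Ok(previous != Some(digest))
    }
}
```

Unlike the file_changed call in the build script, this version returns an io::Result<bool>. A missing source file therefore surfaces as an error instead of a silent rebuild.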

Generating the Rust bindings for in-kernel data structures currently depends on struct definitions matching the regex struct _data_[^{}]*, which is not particularly sophisticated but has worked well enough so far. One advantage of this approach is that data structures sent to userland are clearly marked in the C BPF code wherever they are used.

[c]
// the build step generates `$OUT_DIR/connection.rs`
struct _data_connect {
    u64 id;
    u64 ts;
    char comm[TASK_COMM_LEN];
    u32 saddr;
    u32 daddr;
    u16 dport;
    u16 sport;
};
[/c]
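To illustrate the marker convention, the following sketch finds the names of struct definitions that start with _data_ in a header. It uses plain string search instead of a regex crate. Unlike the regex above, it only counts definitions, meaning the name must be followed by an opening brace. A parameter declared as struct _data_connect * is therefore ignored. marked_structs is a hypothetical helper, not RedBPF’s implementation.

```rust
/// Hypothetical helper: returns the names of structs whose definition
/// starts with `struct _data_`, i.e. the structs meant for userland.
pub fn marked_structs(header: &str) -> Vec<String> {
    const MARKER: &str = "struct _data_";
    let mut names = Vec::new();
    let mut rest = header;
    while let Some(pos) = rest.find(MARKER) {
        // skip "struct " and keep the `_data_...` identifier
        let after = &rest[pos + "struct ".len()..];
        let ident_len = after
            .find(|c: char| !(c.is_ascii_alphanumeric() || c == '_'))
            .unwrap_or(after.len());
        // only count definitions: the name must be followed by `{`
        if after[ident_len..].trim_start().starts_with('{') {
            names.push(after[..ident_len].to_string());
        }
        rest = &after[ident_len..];
    }
    names
}
```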

In ingraind we read the raw data from a perf event stream, and convert it to a safe, high-level structure. Technically, there’s still a fair bit of repetition in this process, something that may be optimized further with a few clever procedural macros.

[rust]
use serde::{Deserialize, Serialize};
use std::net::Ipv4Addr;

// pulls in the bindgen-generated `_data_connect` struct
include!(concat!(env!("OUT_DIR"), "/connection.rs"));

#[derive(Debug, Serialize, Deserialize)]
pub struct Connection {
    pub task_id: u64,
    pub name: String,
    pub destination_ip: Ipv4Addr,
    pub destination_port: u16,
    pub source_ip: Ipv4Addr,
    pub source_port: u16,
    pub proto: String,
}

// to_string, to_ipv4 and to_le are small helpers not shown here
impl From<_data_connect> for Connection {
    fn from(data: _data_connect) -> Connection {
        Connection {
            task_id: data.id,
            // reinterpret the C `char` array as bytes
            name: to_string(unsafe { &*(&data.comm as *const [i8] as *const [u8]) }),
            source_ip: to_ipv4(data.saddr),
            destination_ip: to_ipv4(data.daddr),
            destination_port: to_le(data.dport),
            source_port: to_le(data.sport),
            proto: String::new(),
        }
    }
}
[/rust]
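The helpers called in From<_data_connect> (to_string, to_ipv4 and to_le) are not shown. A minimal sketch of equivalent conversions follows. It assumes the BPF program copies addresses and ports into the struct in network byte order. It also assumes comm is a NUL-padded array of i8, since C char is signed on x86_64. The names comm_to_string, to_ipv4 and port_from_be are hypothetical. Reading comm element by element also avoids the unsafe slice cast.

```rust
use std::net::Ipv4Addr;

/// Converts a NUL-padded C `char` array (e.g. `comm[TASK_COMM_LEN]`)
/// into a String without an unsafe pointer cast.
pub fn comm_to_string(comm: &[i8]) -> String {
    let bytes: Vec<u8> = comm
        .iter()
        .take_while(|&&c| c != 0)
        .map(|&c| c as u8)
        .collect();
    String::from_utf8_lossy(&bytes).into_owned()
}

/// Assumes `addr` holds the address in network order exactly as it sat in
/// kernel memory, so its native-endian bytes are the four octets.
pub fn to_ipv4(addr: u32) -> Ipv4Addr {
    Ipv4Addr::from(addr.to_ne_bytes())
}

/// Assumes the port was copied in network (big-endian) byte order.
pub fn port_from_be(port: u16) -> u16 {
    u16::from_be(port)
}
```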

There are several ways to distribute the resulting eBPF bytecode. We chose to embed it in the main binary to keep distribution simple.

[rust]
impl EBPFGrain<'static> for UDP {
    fn code() -> &'static [u8] {
        include_bytes!(concat!(env!("OUT_DIR"), "/udp.elf"))
    }

    …
}
[/rust]
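include_bytes! embeds whatever file sits at that path. A cheap sanity check before parsing can therefore catch a wrong or stale artifact early. The sketch below is a hypothetical helper, not part of RedBPF. It checks the ELF magic and the 64-bit class. It also checks that the e_machine field (bytes 18 and 19 of the header) equals EM_BPF, the machine type (247) used for eBPF object files.

```rust
/// Machine type for eBPF objects (EM_BPF in <elf.h>).
const EM_BPF: u16 = 247;

/// Hypothetical sanity check for an embedded eBPF object: verifies the
/// ELF magic, the 64-bit class, and that e_machine is EM_BPF.
pub fn looks_like_bpf_elf(code: &[u8]) -> bool {
    // e_ident (16 bytes) + e_type (2) + e_machine (2)
    if code.len() < 20 || !code.starts_with(b"\x7fELF") || code[4] != 2 {
        return false;
    }
    let raw = [code[18], code[19]];
    // e_ident[5] tells us the byte order of the remaining header fields
    let machine = match code[5] {
        1 => u16::from_le_bytes(raw), // ELFDATA2LSB
        2 => u16::from_be_bytes(raw), // ELFDATA2MSB
        _ => return false,
    };
    machine == EM_BPF
}
```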

One important thing to note here is that the resulting binary may be kernel-dependent. The kernel ABI is unstable, although certain parts of it rarely change. Another consideration is RANDSTRUCT, or struct layout randomization. This hardening feature randomizes the layout of marked structs inside the kernel to make exploitation more difficult. Distribution kernels often have it disabled. If you build your own kernel, check whether it is enabled so it doesn’t take you by surprise.

Loading and maps

Without the build feature, RedBPF is runtime-only. It parses ELF object files containing eBPF bytecode, returns the list of components in the object file, and provides bindings to manage programs, use BPF maps, and consume perf events. It also includes a few related utilities, such as uname or parsing the IDs of online processors, for which we did not want to pull in another dependency.
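On Linux, the IDs of online processors are published in /sys/devices/system/cpu/online as a comma-separated list of IDs and ranges, for example 0-3,5. A minimal parser for that format could look like the sketch below. parse_cpu_list is a hypothetical name and not necessarily how RedBPF does it.

```rust
use std::num::ParseIntError;

/// Parses a Linux CPU list such as "0-3,5,7-8" (the format of
/// /sys/devices/system/cpu/online) into individual CPU IDs.
pub fn parse_cpu_list(list: &str) -> Result<Vec<u32>, ParseIntError> {
    let mut cpus: Vec<u32> = Vec::new();
    for part in list.trim().split(',').filter(|p| !p.is_empty()) {
        match part.split_once('-') {
            // a range such as "0-3" expands to every ID in it
            Some((start, end)) => {
                let (start, end) = (start.parse::<u32>()?, end.parse::<u32>()?);
                cpus.extend(start..=end);
            }
            // a single ID such as "5"
            None => cpus.push(part.parse::<u32>()?),
        }
    }
    Ok(cpus)
}
```

In an agent you would pass it the file contents, e.g. the result of std::fs::read_to_string on that sysfs path. The trailing newline is handled by the trim.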

After loading a module, all BPF maps are automatically initialized, so we only need to deal with programs.

[rust]
use redbpf::Module;

let mut module = Module::parse(Self::code())?;
for prog in module.programs.iter_mut() {
    prog.load(module.version, module.license.clone()).unwrap();
}
[/rust]
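prog.load receives module.version, presumably the kernel version code the object was built for. Kernel version codes are conventionally packed like the kernel’s KERNEL_VERSION(a, b, c) macro, (a << 16) + (b << 8) + c. Recent kernels cap the sublevel at 255. As a hedged sketch, a hypothetical helper that derives such a code from a uname-style release string might look like this. It is not RedBPF’s uname utility.

```rust
/// Packs a kernel version like the KERNEL_VERSION(a, b, c) macro, capping
/// the sublevel at 255 so it cannot spill into the minor number.
pub fn kernel_version_code(major: u32, minor: u32, sublevel: u32) -> u32 {
    (major << 16) + (minor << 8) + sublevel.min(255)
}

/// Hypothetical helper: parses a uname-style release such as
/// "4.19.0-6-amd64" into a version code.
pub fn version_code_from_release(release: &str) -> Option<u32> {
    // keep only the leading "a.b.c" part
    let numeric = release
        .split(|c: char| c != '.' && !c.is_ascii_digit())
        .next()?;
    let mut parts = numeric.split('.').map(|p| p.parse::<u32>().ok());
    let major = parts.next()??;
    let minor = parts.next()??;
    // a missing sublevel (e.g. "5.4") counts as 0
    let sublevel = parts.next().flatten().unwrap_or(0);
    Some(kernel_version_code(major, minor, sublevel))
}
```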

Because BPF programs come in many types, attaching them correctly is up to the user. For example, all kprobes and kretprobes can be attached like so. Notice the unwrap: it deliberately crashes the program if attaching fails for any reason.

[rust]
use redbpf::ProgramKind::*;

for prog in self
    .module
    .programs
    .iter_mut()
    .filter(|p| p.kind == Kprobe || p.kind == Kretprobe)
{
    println!("Program: {}, {:?}", prog.name, prog.kind);
    prog.attach_probe().unwrap();
}

self.bind_perf(backends)
[/rust]
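The unwrap above stops at the first failure. If you would rather attach everything possible and report what failed, one option is to filter on the program kind and collect errors. To keep the sketch self-contained, it uses hypothetical stand-in types (Kind, Prog) and takes the attach operation as a closure. None of this is RedBPF’s API.

```rust
#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Kind {
    Kprobe,
    Kretprobe,
    Xdp,
}

#[derive(Debug)]
pub struct Prog {
    pub name: String,
    pub kind: Kind,
}

/// Attaches every kprobe/kretprobe with the supplied `attach` closure and
/// returns the number attached, or the failures instead of panicking.
pub fn attach_probes<F>(progs: &mut [Prog], mut attach: F) -> Result<usize, Vec<String>>
where
    F: FnMut(&mut Prog) -> Result<(), String>,
{
    let mut attached = 0;
    let mut failed = Vec::new();
    for prog in progs
        .iter_mut()
        .filter(|p| matches!(p.kind, Kind::Kprobe | Kind::Kretprobe))
    {
        match attach(&mut *prog) {
            Ok(()) => attached += 1,
            Err(e) => failed.push(format!("{}: {}", prog.name, e)),
        }
    }
    if failed.is_empty() {
        Ok(attached)
    } else {
        Err(failed)
    }
}
```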

Perf events

Transferring a large amount of trace data from the kernel can be done in two primary ways. You can aggregate it in kernel maps and periodically dump them to userspace, or use perf events to stream it from the kernel in real time through a ring buffer. Reading perf events from the ring buffer does not require a syscall per event. So if, for instance, we want to aggregate data based on arbitrary properties, which we do, it is easier to pump the raw event stream out to userland and do the aggregation there. This has the peculiar result of seemingly high CPU use in top, but with very low instructions per cycle, which means that our program is mostly waiting on memory access, as explained in Brendan Gregg’s blog post.
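Aggregating in userland means the grouping key can be any property the agent knows about, not just what the BPF program computed. As an illustration, the sketch below sums bytes per (process, destination port) pair from already-decoded events. TrafficEvent and aggregate are hypothetical and do not reflect ingraind’s data model.

```rust
use std::collections::HashMap;

/// Hypothetical decoded event, as it might arrive from a perf ring buffer.
pub struct TrafficEvent {
    pub process: String,
    pub dport: u16,
    pub bytes: u64,
}

/// Sums traffic volume per (process, destination port) pair.
pub fn aggregate<'a, I>(events: I) -> HashMap<(String, u16), u64>
where
    I: IntoIterator<Item = &'a TrafficEvent>,
{
    let mut totals = HashMap::new();
    for ev in events {
        *totals.entry((ev.process.clone(), ev.dport)).or_insert(0) += ev.bytes;
    }
    totals
}
```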

The perf event interface in RedBPF was designed so that we can use a single epoll loop to listen to every perf event source. This also means that for improved throughput, we could even start an epoll thread for every CPU.
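Rust’s standard library has no epoll binding, so the sketch below swaps in an std::sync::mpsc channel to show the same shape. One producer per CPU, standing in for a per-CPU ring buffer, feeds a single consumer loop. It is an analogy for the single-loop design, not RedBPF’s epoll-based implementation, and fan_in is a hypothetical function.

```rust
use std::sync::mpsc;
use std::thread;

/// Spawns one producer thread per CPU ID, each sending `per_cpu` events
/// tagged with its CPU, and drains them all from a single receiver.
pub fn fan_in(cpus: &[u32], per_cpu: usize) -> Vec<(u32, usize)> {
    let (tx, rx) = mpsc::channel();
    for &cpu in cpus {
        let tx = tx.clone();
        thread::spawn(move || {
            for seq in 0..per_cpu {
                // a real agent would read samples from this CPU's buffer here
                tx.send((cpu, seq)).expect("receiver dropped");
            }
        });
    }
    // drop the original sender so the receiving loop ends once all
    // producers have finished and dropped their clones
    drop(tx);
    rx.into_iter().collect()
}
```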

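[rust]
// `bind_perf`, called at the end of the previous snippet; parameter and
// handler types are elided in the original
fn bind_perf(&mut self, backends: &[…]) -> Vec<…> {
    let online_cpus = cpus::get_online().unwrap();
    let mut output: Vec<…> = vec![];

    // map kind 4 is BPF_MAP_TYPE_PERF_EVENT_ARRAY
    for ref mut m in self.module.maps.iter_mut().filter(|m| m.kind == 4) {
        // bind one perf ring buffer per online CPU
        for cpuid in online_cpus.iter() {
            let pm = PerfMap::bind(m, -1, *cpuid, 16, -1, 0).unwrap();
            output.push(Box::new(PerfHandler {
                name: m.name.clone(),
                perfmap: pm,
                callback: T::get_handler(m.name.as_str()),
                backends: backends.to_vec(),
            }));
        }
    }

    output
}
[/rust]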

The above code sample generates a PerfMap object for every online CPU, since in the kernel we also organize data on a per-CPU basis. Note that PerfHandler lives in ingraind, not RedBPF, and is a simple wrapper around reading the ring buffer. The interesting bit looks like this:

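[rust]
// drain every event currently in this CPU's ring buffer
while let Some(ev) = self.perfmap.read() {
    match ev {
        Event::Lost(lost) => {
            println!("Possibly lost {} samples for {}", lost.count, &self.name);
        }
        Event::Sample(sample) => {
            // hand the raw sample bytes to the grain-specific callback
            let msg = unsafe {
                (self.callback)(slice::from_raw_parts(
                    sample.data.as_ptr(),
                    sample.size as usize,
                ))
            };
            // forward the decoded message, if any, to every backend
            if let Some(m) = msg {
                send_to(&self.backends, m);
            }
        }
    }
}
[/rust]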
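The callback receives the raw sample bytes. One way to turn them into a #[repr(C)] struct like the bindgen-generated _data_connect is to check the length first and then copy with std::ptr::read_unaligned. The copy has to tolerate misalignment because a slice into the ring buffer need not be aligned for the struct. RawConnect is a hypothetical mirror of _data_connect, assuming TASK_COMM_LEN is 16 as in the Linux headers. decode is not an ingraind function.

```rust
use std::mem;
use std::ptr;

/// Hypothetical mirror of `_data_connect`, laid out like the C struct.
#[repr(C)]
#[derive(Debug, Clone, Copy)]
pub struct RawConnect {
    pub id: u64,
    pub ts: u64,
    pub comm: [i8; 16],
    pub saddr: u32,
    pub daddr: u32,
    pub dport: u16,
    pub sport: u16,
}

/// Copies a RawConnect out of a raw sample, rejecting samples that are
/// too short to contain one.
pub fn decode(sample: &[u8]) -> Option<RawConnect> {
    if sample.len() < mem::size_of::<RawConnect>() {
        return None;
    }
    // SAFETY: the length is checked above, read_unaligned accepts any
    // alignment, and every bit pattern is valid for these integer fields.
    Some(unsafe { ptr::read_unaligned(sample.as_ptr() as *const RawConnect) })
}
```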

Overall, RedBPF tries to strike a balance. It aims to be low-level enough that you can see what’s going on, but high-level enough to get out of the way and let you focus on what you need.

ingraind

Along with the RedBPF library, today we are releasing

The backbone of this pipeline is the great actix library. Raw data originating from the BPF probes is picked up by an event handler, turned into a safe data structure, and then passed into the actor event loop. It travels through a pipe of what we call “aggregators” and ends up at a “backend”.

All of these are implemented as actors, and how they are chained together is defined in the configuration file. The following example tracks TLS handshakes across all IPv4 ports, and file access everywhere on the root filesystem. It whitelists the process, s_ip, d_port, and path tags, which may or may not make sense for every measurement. It then replaces the dynamic process names generated by Docker’s userland proxy with a uniform one. The destination is an S3 bucket, and the AWS credentials are passed in on the command line.

[ini]
monitor_dirs = ["/"]

[[probe]]
pipelines = ["s3"]

[probe.config]
type = "TLS"

[pipeline.s3.config]
backend = "S3"

[[pipeline.s3.steps]]
type = "Whitelist"
allow = ["process", "s_ip", "d_port", "path"]

[[pipeline.s3.steps]]
type = "Regex"
patterns = [
    { key = "process", regex = "conn\\d+", replace_with = "docker_conn_proxy" },
]
[/ini]
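To make the two pipeline steps in this configuration concrete, here is a rough sketch of what they do to one measurement’s tags. It is not ingraind’s actor-based implementation. Tags, whitelist and normalize_docker_proxy are hypothetical. The rename is hand-rolled instead of using a regex, so it only handles names made up entirely of conn followed by digits.

```rust
use std::collections::HashMap;

/// Hypothetical tag set attached to one measurement.
pub type Tags = HashMap<String, String>;

/// Whitelist step: drop every tag whose key is not in `allow`.
pub fn whitelist(tags: &mut Tags, allow: &[&str]) {
    tags.retain(|key, _| allow.contains(&key.as_str()));
}

/// Stand-in for the Regex step: rename Docker userland proxy processes
/// such as "conn123" to a single uniform name.
pub fn normalize_docker_proxy(tags: &mut Tags) {
    if let Some(process) = tags.get_mut("process") {
        let is_proxy = process.strip_prefix("conn").map_or(false, |rest| {
            !rest.is_empty() && rest.chars().all(|c| c.is_ascii_digit())
        });
        if is_proxy {
            *process = "docker_conn_proxy".to_string();
        }
    }
}
```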

Conclusion

We believe that the architecture and unique features of RedBPF and ingraind make them a powerful combination for security monitoring. In the future, there’s definitely room for improvement around the ergonomics of sharing data structures between BPF code and Rust, and there’s always more room for optimization.

To make monitoring containerized environments even easier, we will be adding container awareness to the agent shortly.

We are excited to give something back to the amazing Rust community. We look forward to your feedback, issues, and contributions as we work toward a one-stop solution for production security monitoring. Feel free to get in touch with us below! Happy hacking!