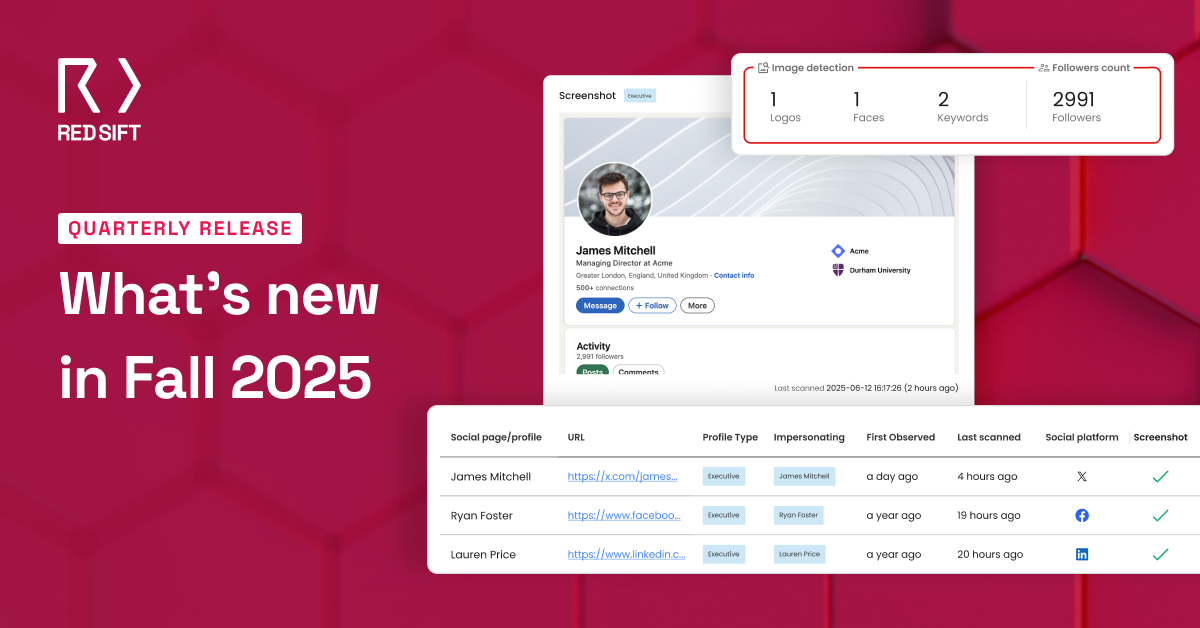

Executive Summary: Accurate logo detection is essential for protecting brands against misuse and fraudulent activities. Red Sift’s hybrid AI approach enhances detection precision, effectively balancing the reduction of false positives with the identification of genuine threats.

This article:

- Explores the significance of precise logo detection in safeguarding brands.

- Introduces Red Sift’s innovative hybrid AI method to boost detection accuracy.

- Emphasizes balancing the reduction of false positives with the identification of genuine threats.

Introduction

Logo detection is crucial for brand protection, helping identify logo misuse on lookalike domains and in other fraudulent activity. Detecting genuine logo appearances while minimizing false positives is equally essential: false positives waste resources, trigger unnecessary enforcement actions, and obscure genuine threats.

At Red Sift, our Brand Trust product combats brand abuse, fraud, and lookalike attacks by discovering and monitoring fraudulent domains imitating legitimate brands. Accurate logo detection is key to providing reliable insights and actionable intelligence. To enhance accuracy, we explore various techniques, including combining narrow AI object detection models with generative AI models. In this blog post, we describe our hybrid AI approach and share insights from our experiments.

Integrating real-time detection with Generative AI verification

Logo detection models are typically built on object detection architectures, which enable them to identify brand symbols across a wide variety of images. To handle large volumes of requests efficiently, real-time detectors are preferred because they balance speed and accuracy. YOLO, EfficientDet, and RT-DETR have emerged as leading solutions in this space. While these models perform impressively, they can still struggle with ambiguous cases in which logos appear against complex backgrounds, are partially occluded, or resemble other shapes.

To enhance our logo detection process and reduce false positives, we introduce an additional verification step. After our existing model detects a logo, a Large Multimodal Model (LMM) compares the detected logo against a reference logo. LMMs extend traditional Large Language Models (LLMs) with vision capabilities, allowing them to analyze images alongside text. Because only a small fraction of domains contain the logo of interest, the volume of this verification step remains manageable. LMM-based verification is slower than real-time detection, but its improved accuracy justifies the additional processing time.
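The two-stage flow can be sketched as follows. This is a minimal illustration under stated assumptions, not Red Sift's implementation: `Detection`, `hybrid_verify`, the `detect`/`verify` callables, and the `min_confidence` threshold are hypothetical names standing in for the real-time detector and the LMM call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class Detection:
    brand: str         # brand whose logo the detector believes it found
    confidence: float  # detector score in [0, 1]
    crop: bytes        # cropped logo region, e.g. PNG bytes


def hybrid_verify(
    screenshots: Dict[str, bytes],
    detect: Callable[[bytes], Optional[Detection]],
    verify: Callable[[bytes, str], bool],
    min_confidence: float = 0.5,  # hypothetical detector threshold
) -> Dict[str, bool]:
    """Run the fast detector on every page; call the slow LMM verifier only on hits."""
    results: Dict[str, bool] = {}
    for domain, image in screenshots.items():
        detection = detect(image)
        if detection is None or detection.confidence < min_confidence:
            # No candidate logo: the page never reaches the LMM.
            results[domain] = False
            continue
        # Expensive second stage: compare the crop against the brand's reference logo.
        results[domain] = verify(detection.crop, detection.brand)
    return results
```

Because `verify` runs only where `detect` returns a confident hit, the number of LMM calls scales with the small number of detections rather than with the number of monitored domains.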

Technical overview: How LMM-based verification works

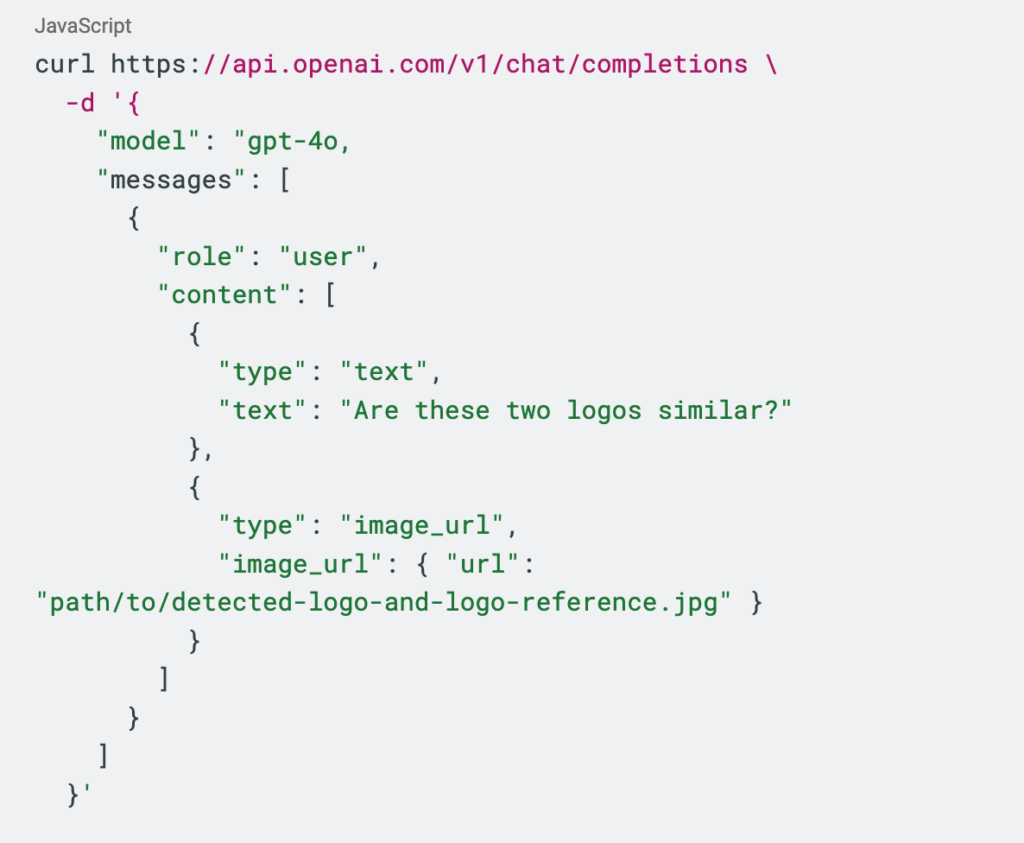

Generative AI has made cutting-edge models remarkably easy to use. At its core, our approach sends a prompt with an image to a multimodal model, which analyzes the input and determines whether the detected logo matches a reference. Here’s an example of how we can send such a request to an OpenAI endpoint to perform this verification:
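A minimal sketch of such a request body, assuming the Chat Completions message format with images passed as base64 data URLs. The prompt wording, the `MATCH`/`NO_MATCH` labels, and the helper names are hypothetical; this version sends the reference and detected logos as two separate images, which is only one of several possible image compositions.

```python
import base64


def to_data_url(png_bytes: bytes) -> str:
    """Encode raw PNG bytes as a base64 data URL, a form the Chat Completions API accepts for images."""
    return "data:image/png;base64," + base64.b64encode(png_bytes).decode("ascii")


def build_verification_request(reference_png: bytes, detected_png: bytes,
                               brand: str, model: str = "gpt-4o") -> dict:
    """Build a Chat Completions request body asking whether two logos match."""
    # Hypothetical prompt; in practice this is the text that gets tuned.
    prompt = (
        f"The first image is the official {brand} logo. The second image is a logo "
        "detected on a website. Do they show the same logo? "
        "Answer with exactly one word: MATCH or NO_MATCH."
    )
    return {
        "model": model,
        "temperature": 0,  # reduce sampling randomness for a yes/no decision
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": to_data_url(reference_png)}},
                {"type": "image_url", "image_url": {"url": to_data_url(detected_png)}},
            ],
        }],
    }
```

With the official `openai` Python package, this body could be sent as `OpenAI().chat.completions.create(**body)`, and the verdict read from `response.choices[0].message.content`.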

This simple API call demonstrates how easily we can integrate generative AI into our verification pipeline. However, while the fundamental approach is straightforward, optimizing it for real-world performance is far more complex. We take a data-driven approach, building a comprehensive test dataset with an equal number of matching and non-matching logo verification cases. This allows us to systematically tune various parameters, including:

- Prompt Optimization: Refining how we instruct the model to assess logo similarity, such as adjusting conditions related to color and taglines to improve recall.

- Image Composition: Experimenting with different ways to present the reference logos and the detected logo in a single image or multiple images.

- Test-Time Compute Strategy: Determining the optimal way for the model to “think” before making a decision.

- Model Selection: Evaluating different generative AI models to identify the best-performing solution.
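Because the test set is balanced, a verifier that always gives the same answer scores exactly 0.5 accuracy, which makes accuracy a meaningful headline metric. A small helper like the hypothetical `score` below could report it alongside precision (which falls as false positives rise) and recall (the share of genuine matches caught, which the prompt adjustments above aim to improve).

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Scores:
    accuracy: float
    precision: float
    recall: float


def score(labels: List[bool], predictions: List[bool]) -> Scores:
    """labels[i] is True for a genuine match; predictions[i] is the verifier's verdict."""
    if len(labels) != len(predictions) or not labels:
        raise ValueError("labels and predictions must be non-empty and of equal length")
    pairs = list(zip(labels, predictions))
    tp = sum(1 for l, p in pairs if l and p)          # genuine match, flagged
    fp = sum(1 for l, p in pairs if not l and p)      # false positive
    fn = sum(1 for l, p in pairs if l and not p)      # missed genuine match
    tn = len(pairs) - tp - fp - fn
    return Scores(
        accuracy=(tp + tn) / len(pairs),
        precision=tp / (tp + fp) if tp + fp else 0.0,
        recall=tp / (tp + fn) if tp + fn else 0.0,
    )
```

For example, `score([True] * 4 + [False] * 4, [True] * 8)` returns accuracy 0.5, precision 0.5, and recall 1.0: the degenerate "always match" baseline.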

Through extensive experimentation, we have gained valuable insights into how to tune these aspects effectively. In the following sections, we share our key findings on the last two: test-time compute strategy and model selection.

Experiment 1: Test-time compute strategy

Recent research suggests that increasing test-time computation can enhance the accuracy of LLM-based models by allowing more time for reasoning (source). We tested this concept by evaluating three different response strategies:

- Result Alone: The model provides a direct answer without additional information.

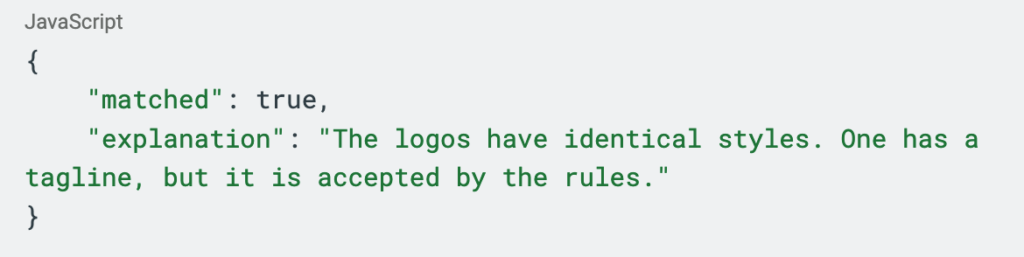

- Result → Explanation: The model provides an answer followed by an explanation.

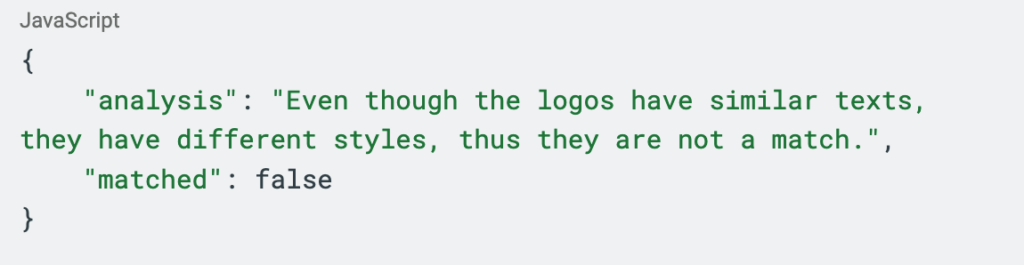

- Analysis → Result: The model generates an analysis before providing a decision.
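The three strategies differ only in the output format requested from the model, so they can be expressed as interchangeable prompt suffixes plus a parser. The instruction strings, the `MATCH`/`NO_MATCH` labels, and `parse_decision` are hypothetical illustrations, not the prompts used in the experiment.

```python
import re

# Hypothetical output instructions appended to the verification prompt.
STRATEGY_INSTRUCTIONS = {
    "result_alone": "Reply with MATCH or NO_MATCH only.",
    "result_then_explanation": (
        "Reply with MATCH or NO_MATCH on the first line, then briefly explain why."
    ),
    "analysis_then_result": (
        "First compare the shapes, colors and text of both logos. "
        "Finish with a final line of the form 'Decision: MATCH' or 'Decision: NO_MATCH'."
    ),
}

# Case-sensitive, whole-word labels, so prose such as "matching" is ignored.
_LABEL = re.compile(r"\b(NO_MATCH|MATCH)\b")


def parse_decision(response: str, strategy: str) -> bool:
    """Return True for MATCH. Result-first strategies use the first label, analysis-first the last."""
    if strategy not in STRATEGY_INSTRUCTIONS:
        raise ValueError(f"unknown strategy: {strategy}")
    labels = _LABEL.findall(response)
    if not labels:
        raise ValueError("no MATCH/NO_MATCH label found in response")
    verdict = labels[-1] if strategy == "analysis_then_result" else labels[0]
    return verdict == "MATCH"
```

Taking the last label for Analysis → Result matters because the analysis itself may mention a label before the final decision.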

We used GPT-4o and ran the test three times for each strategy. The results were consistent and surprising: Analysis → Result performed worst, while the other two strategies performed about the same. For this logo verification task, giving the model more time to think did not help and even slightly reduced accuracy.

Experiment 2: Large Multimodal Models

There are several LMMs available today, spanning both commercial and open-source solutions. To evaluate their performance, we benchmarked a diverse set of models with varying sizes and architectures from multiple providers:

- GPT-4o and GPT-4o-mini: two vision models from OpenAI

- Claude 3.7 Sonnet: the latest version of Anthropic’s most intelligent model, with extended thinking support

- Gemini 2.0 Flash and Flash-Lite: the recently released Gemini 2.0 Flash model family from Google

- Llama 3.2 90B: Meta’s largest vision-capable model

- Qwen2.5-VL 7B: one of the most highly regarded open-source models, from Alibaba

We made a few observations:

- Among the three flagship models from OpenAI, Google, and Anthropic, GPT-4o was the clear leader in accuracy. Claude 3.7 Sonnet came last, but enabling extended thinking with a budget of up to 1,024 tokens significantly improved its results, closing the gap with GPT-4o.

- Among the smaller models, GPT-4o-mini outperformed Gemini 2.0 Flash-Lite, and both significantly surpassed the 90B-parameter open-source Llama 3.2.

- The most impressive result came from Qwen2.5-VL, which performed outstandingly for a 7B-parameter model.
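Extended thinking corresponds to the `thinking` parameter of Anthropic's Messages API, where `budget_tokens` caps the tokens spent on reasoning; 1,024 is the minimum allowed, and `max_tokens` must exceed it. The sketch below only builds a request body. The prompt, the `build_claude_request` name, and the 256-token answer allowance are assumptions, and we assume the "up to 1024 tokens" above corresponds to `budget_tokens=1024`.

```python
import base64


def build_claude_request(reference_png: bytes, detected_png: bytes, prompt: str,
                         thinking_budget: int = 1024) -> dict:
    """Messages API body for Claude 3.7 Sonnet with extended thinking enabled."""
    if thinking_budget < 1024:
        raise ValueError("extended thinking requires budget_tokens >= 1024")

    def image_block(png: bytes) -> dict:
        # Base64 image content block, as accepted by the Messages API.
        return {"type": "image",
                "source": {"type": "base64", "media_type": "image/png",
                           "data": base64.b64encode(png).decode("ascii")}}

    return {
        "model": "claude-3-7-sonnet-20250219",
        # max_tokens must exceed budget_tokens; the extra 256 is a hypothetical answer allowance.
        "max_tokens": thinking_budget + 256,
        "thinking": {"type": "enabled", "budget_tokens": thinking_budget},
        "messages": [{"role": "user", "content": [
            image_block(reference_png),
            image_block(detected_png),
            {"type": "text", "text": prompt},
        ]}],
    }
```

With the `anthropic` Python package, this could be sent as `anthropic.Anthropic().messages.create(**body)`; the response then contains `thinking` blocks followed by a `text` block holding the verdict.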

Experiment 3: Fine-tuned open source LMMs

The data

Inspired by the Qwen model’s performance, we decided to fine-tune open-source LMMs for this specific task to see how well they could perform. When preparing the instruction dataset, we ensured that no logo used in training appeared in the test set, preventing information leakage that would inflate test scores and mask overfitting. We created instruction-response pairs and tested two response formats: the result only, and Analysis → Result. Since a result alone offers little learning signal, we hypothesized that Analysis → Result responses would be more effective.
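Keeping training logos out of the test set amounts to a group-wise split. The sketch below groups examples by a logo key (called `brand` here, a hypothetical stand-in for whatever identifies a logo) and shows the two response formats as chat-style records. `brand_disjoint_split`, `make_record`, and the record layout are assumptions; a real vision record would also carry the image inputs.

```python
import random
from collections import defaultdict
from typing import List, Optional, Tuple


def brand_disjoint_split(pairs: List[Tuple[str, object]], test_fraction: float = 0.2,
                         seed: int = 0):
    """Split (brand, example) pairs so that no brand appears in both train and test."""
    by_brand = defaultdict(list)
    for brand, example in pairs:
        by_brand[brand].append(example)
    brands = sorted(by_brand)
    random.Random(seed).shuffle(brands)  # reproducible choice of held-out brands
    n_test = max(1, round(len(brands) * test_fraction))
    test_brands = set(brands[:n_test])
    train = [(b, e) for b in brands if b not in test_brands for e in by_brand[b]]
    test = [(b, e) for b in brands if b in test_brands for e in by_brand[b]]
    return train, test


def make_record(prompt: str, is_match: bool, analysis: Optional[str] = None) -> dict:
    """Instruction-response pair: result only, or Analysis -> Result when `analysis` is given."""
    verdict = "MATCH" if is_match else "NO_MATCH"
    answer = verdict if analysis is None else f"{analysis}\nDecision: {verdict}"
    return {"messages": [
        {"role": "user", "content": prompt},
        {"role": "assistant", "content": answer},
    ]}
```

Splitting by group rather than by individual example is what prevents the same logo from leaking into both sides.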

We first experimented with the smallest available model, Qwen2.5-VL 3B, to compare the two approaches. The baseline, without fine-tuning, failed to provide meaningful predictions, defaulting to a single value for all test data. Fine-tuning with results alone achieved 0.75 accuracy, while fine-tuning with Analysis → Result improved performance to 0.83, confirming that structured reasoning helps the model learn better.

The models

With the dataset finalized, we moved on to fine-tuning. LoRA (Low-Rank Adaptation) freezes the original model weights and trains small low-rank matrices added alongside them, which makes training efficient without modifying the original weights. QLoRA further reduces memory usage by quantizing the frozen base model to 4 bits, which enabled us to fine-tune the 7B model on a single 16 GB T4 GPU. Fine-tuning Qwen2.5-VL 7B yielded an accuracy of 0.935, surpassing models such as Gemini 2.0 Flash. We also fine-tuned Llama 3.2 11B, which reached 0.88, significantly better than the out-of-the-box 90B version benchmarked earlier.
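The core LoRA idea, and the arithmetic behind fitting a 7B model on a T4, can be sketched in plain Python. For a frozen weight matrix W of shape d × k, LoRA learns A (r × k) and B (d × r) and computes y = Wx + (α/r)·B(Ax), training r(d + k) values instead of dk. The memory helper counts weights only, assumes a nominal 7 billion parameters, and ignores activations, optimizer state, and quantization overhead, so real usage is higher. Function names and the 4096 × 4096, rank-16 example are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Plain matrix-vector product."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float, r: int) -> Vector:
    """y = W x + (alpha / r) * B (A x). W stays frozen; only A (r x k) and B (d x r) are trained."""
    base = matvec(W, x)
    update = matvec(B, matvec(A, x))
    return [b + (alpha / r) * u for b, u in zip(base, update)]


def lora_trainable_params(d: int, k: int, r: int) -> int:
    """Trainable values for one d x k layer: r * (d + k) for LoRA versus d * k for a full update."""
    return r * (d + k)


def weight_memory_gb(n_params: float, bits: int) -> float:
    """Memory for the weights alone, in gigabytes (1e9 bytes)."""
    return n_params * bits / 8 / 1e9


# Example: a hypothetical 4096 x 4096 projection adapted at rank 16.
print(lora_trainable_params(4096, 4096, 16), 4096 * 4096)  # 131072 vs 16777216
print(weight_memory_gb(7e9, 16), weight_memory_gb(7e9, 4))  # 14.0 vs 3.5
```

At 16-bit precision the weights alone take about 14 GB, leaving little of a T4's 16 GB for anything else, while 4-bit weights take about 3.5 GB. LoRA initializes B to zero, so training starts from exactly the base model's outputs.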

Finally, we fine-tuned Qwen2.5-VL 7B without quantization on an A100-40GB GPU to maximize performance. This final push yielded an accuracy of 0.965, matching GPT-4o. Achieving GPT-4o-level performance with a specialized 7B model is a remarkable feat and demonstrates the potential of targeted fine-tuning.

Conclusion

By combining traditional logo detection models with LMM-powered verification, we have developed a more robust approach to identifying logos with high confidence. This hybrid method helps mitigate false positives and improves the reliability of our AI-driven solutions. At Red Sift, we continue to push the boundaries of AI research, exploring new ways to improve accuracy and performance across various domains. We have learned a great deal along the way, and we look forward to developing innovative solutions that help our customers better secure their digital presence. If you’d like to learn more, reach out to the team!